Chapter 1: The ML Landscape Map

Understanding the big picture: How machine learning techniques connect and build upon each other

The Big Picture Problem

When you first learn machine learning, it feels like being dropped into a foreign city without a map. You encounter dozens of algorithms, techniques, and concepts that seem completely unrelated:

The Confusion You're Experiencing

- Linear Regression - Simple line fitting

- L1/L2 Regularization - Mysterious penalty terms

- Decision Trees - Rule-based splitting

- Random Forest - Voting trees?

- XGBoost - The "champion" algorithm

- Bagging vs Boosting - What's the difference?

How do these all fit together? When do you use what?

Our Solution: The ML Roadmap

This tutorial provides the roadmap you've been missing. Instead of learning algorithms in isolation, you'll see how they relate, which problem each technique was invented to solve, and how they build upon each other.

Quick Self-Assessment

Rate your current understanding (1-5 scale):

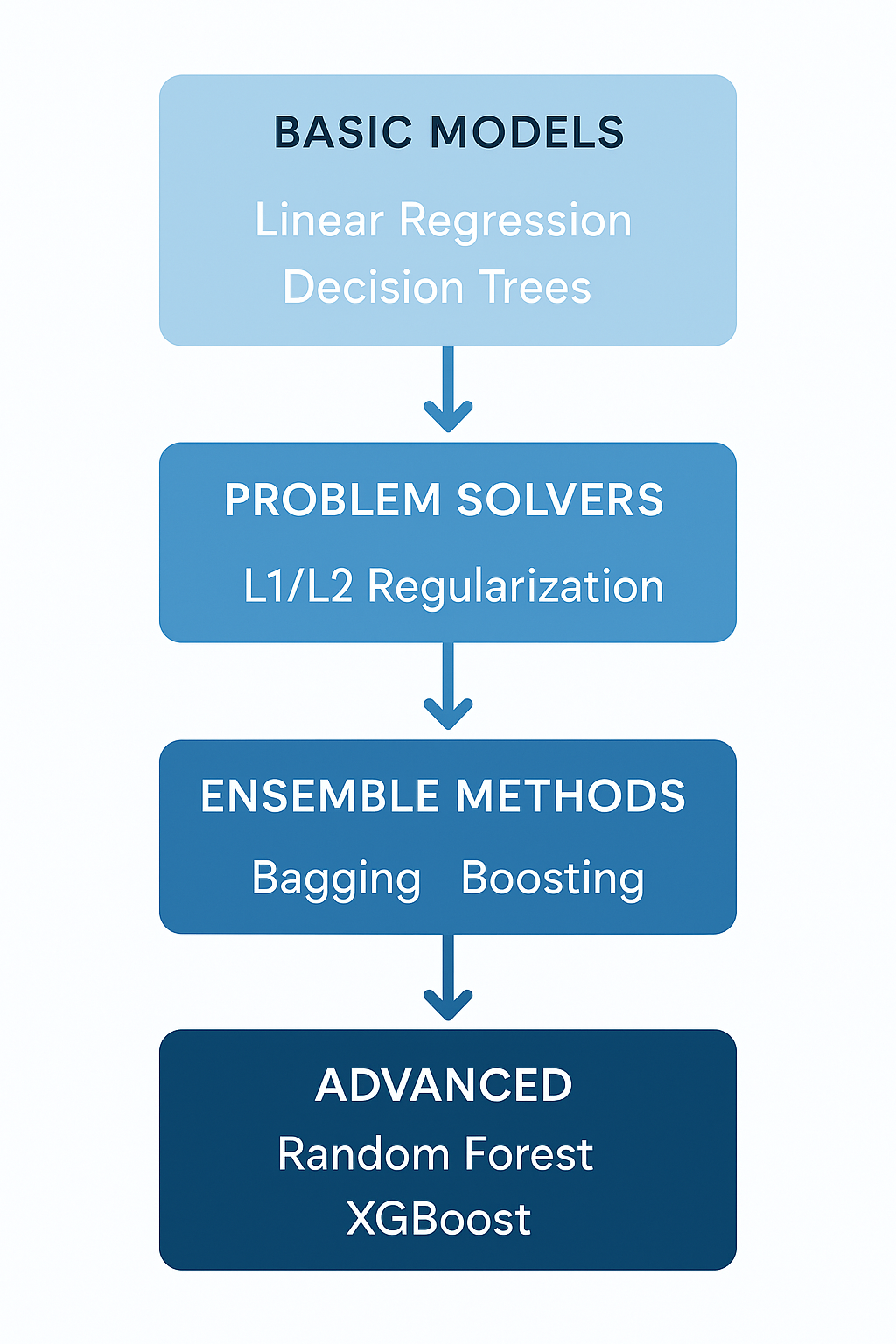

The ML Technique Hierarchy

Machine learning techniques follow a natural progression. Each level addresses problems left open by the previous level:


Level 1: Basic Models

Linear Regression & Decision Trees

Simple, interpretable algorithms that form the foundation of ML.

Problem: They can overfit the training data (unpruned decision trees especially)
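To make "simple line fitting" concrete, here is a minimal sketch of one-feature least squares in plain Python. The function name `fit_line` and the toy data are assumptions made for illustration; they are not part of the tutorial.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = co-variation of x and y divided by the variation of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data generated from y = 2x + 1 (hypothetical, noise-free)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

With noise-free data the fit recovers the generating line exactly. On noisy data with many features, the same least-squares idea is where overfitting starts to appear.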

Level 2: Problem Solvers

L1/L2 Regularization

Techniques that reduce overfitting by adding penalty terms on coefficient size to the loss function.

Problem: Single models still have limitations
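The "penalty terms" are less mysterious once you see them shrink a coefficient. The sketch below solves a one-feature regression with optional L1 and L2 penalties in closed form. It assumes the data is already centered (so no intercept is needed), and the names `fit_slope`, `l1`, and `l2` are illustrative choices, not a library API.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; return 0 inside the band."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def fit_slope(xs, ys, l1=0.0, l2=0.0):
    """Slope w minimizing 0.5*sum((y - w*x)^2) + l1*|w| + 0.5*l2*w^2.

    Assumes xs and ys are centered around zero.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # L1 subtracts from the numerator (can reach exactly zero);
    # L2 adds to the denominator (shrinks but never reaches zero).
    return soft_threshold(sxy, l1) / (sxx + l2)


# Hypothetical centered data from y = 2x
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.0, -2.0, 0.0, 2.0, 4.0]

print(fit_slope(xs, ys))            # 2.0  (no penalty)
print(fit_slope(xs, ys, l2=10.0))   # 1.0  (L2 shrinks the slope)
print(fit_slope(xs, ys, l1=5.0))    # 1.5  (L1 shrinks the slope)
print(fit_slope(xs, ys, l1=25.0))   # 0.0  (a large L1 penalty zeroes it out)
```

The last line shows the property L1 is known for: a strong enough penalty removes a feature entirely, while L2 only makes its coefficient smaller.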

Level 3: Ensemble Methods

Bagging & Boosting

Combine multiple models to improve performance.

Evolution: Random Forest, Gradient Boosting

Level 4: Advanced Implementations

XGBoost & Modern Variants

Optimized implementations of gradient boosting, built for speed and accuracy.

Result: Models that are often state-of-the-art on tabular data

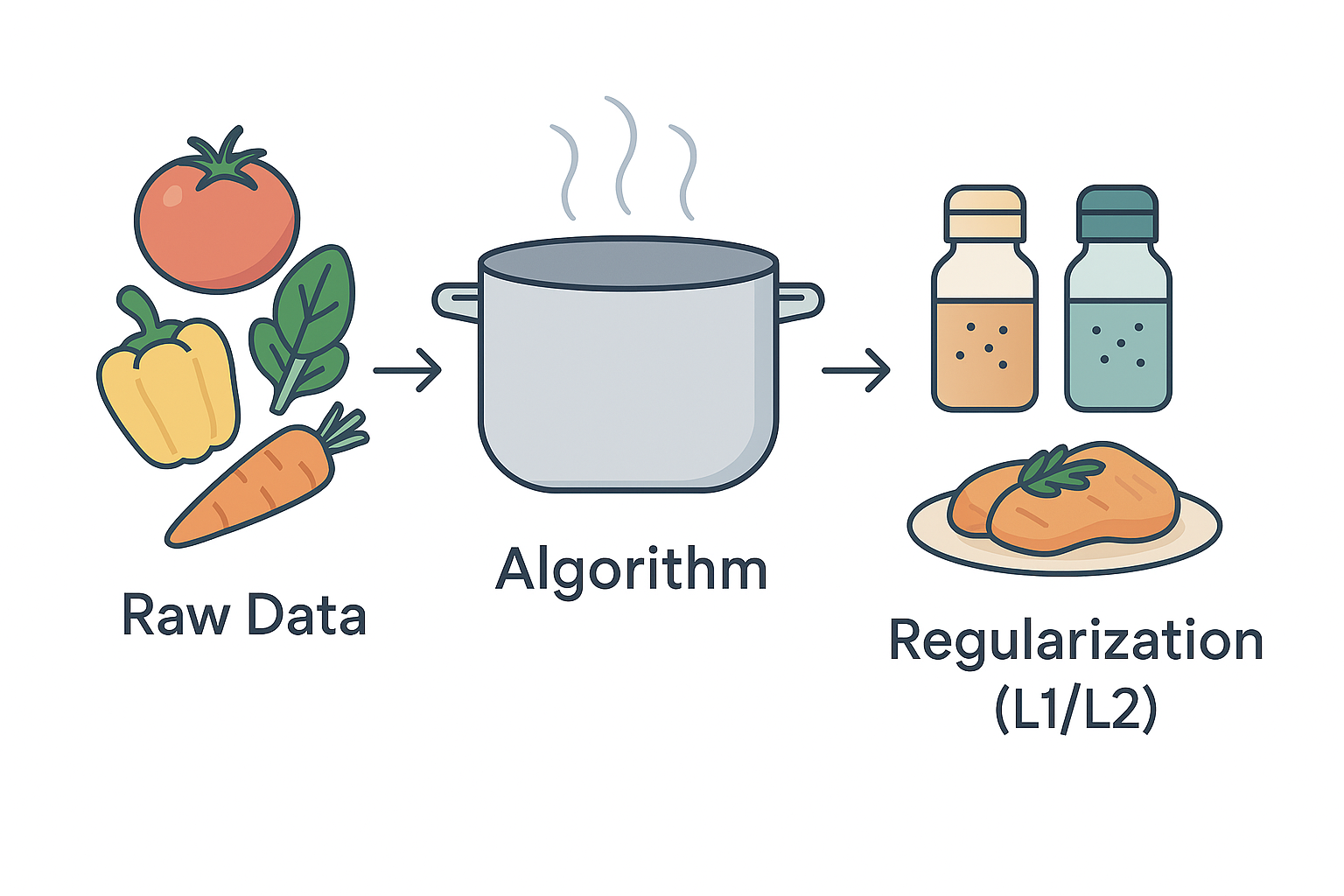

The Cooking Metaphor

Think of machine learning like cooking. This analogy will help you understand the relationships between different techniques:

The ML Cooking Process

Ingredients (data): Features, labels, observations - the foundation of everything

Cooking method (algorithms): Linear regression, decision trees - how you process the data

Seasoning (regularization): L1/L2 penalties - fine-tune the flavor and prevent "oversalting"

Kitchen team (ensembles): Multiple chefs (models) collaborate for the perfect dish


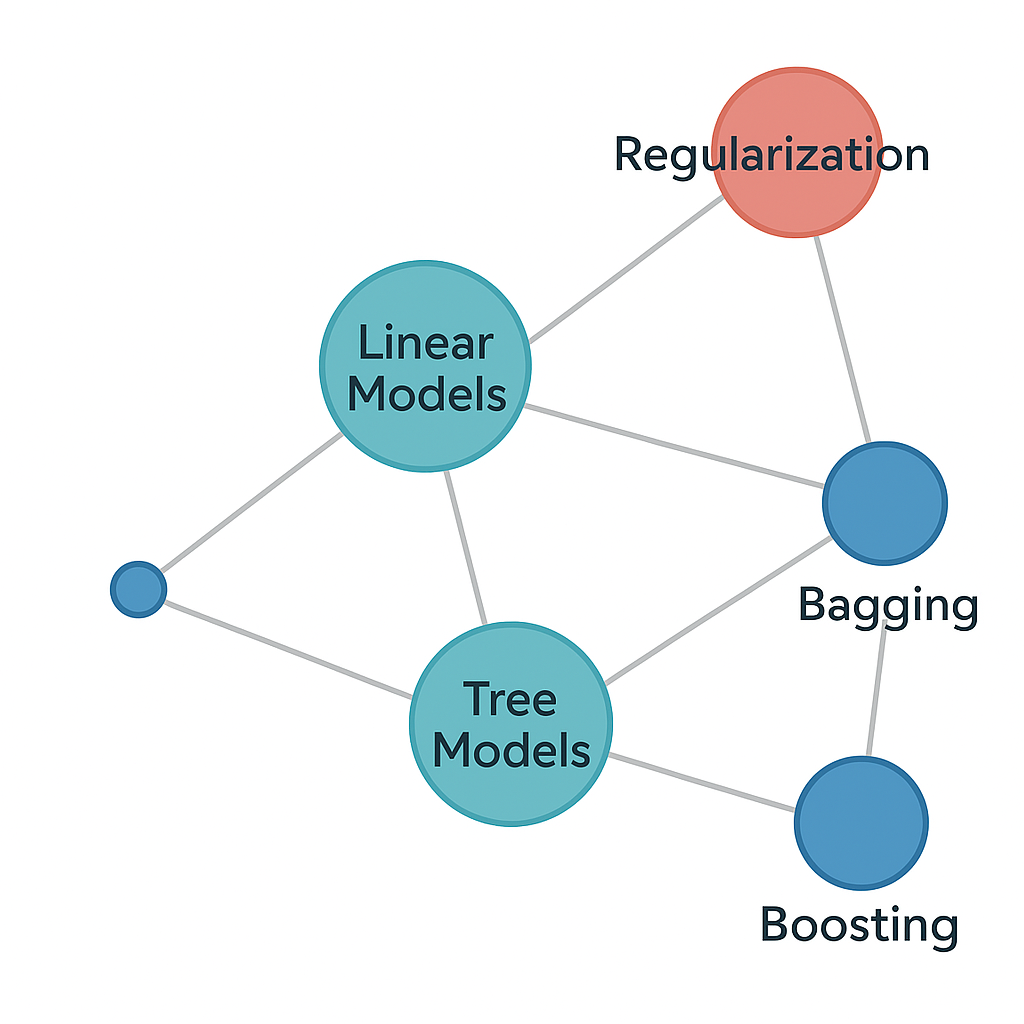

The Connection Web

ML techniques don't exist in isolation. They form a web of relationships where each technique builds on others:

Explore the Connections


Linear Models

Connects to: L1/L2 Regularization, Ensemble Methods

Relationship: Serves as the base learner in some ensemble methods. L1/L2 regularization is the standard remedy for overfitting in linear models.

Evolution: Linear → Regularized Linear → Linear in Ensembles

Decision Trees

Connects to: Random Forest, Gradient Boosting, XGBoost

Relationship: The building block of most ensemble methods. Trees are combined through bagging or boosting.

Evolution: Single Tree → Forest of Trees → Optimized Tree Ensembles
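"Rule-based splitting" can be sketched with the smallest possible tree: a single split, often called a decision stump. The code below is a simplified illustration for one numeric feature and a squared-error criterion. The function names and toy data are assumptions, and real tree learners handle many features and recursive splits.

```python
def fit_stump(xs, ys):
    """Find the threshold on one feature that minimizes squared error.

    Returns (threshold, left_value, right_value): predict left_value
    when x <= threshold, right_value otherwise.
    """
    points = sorted(set(xs))
    best = None
    # Candidate thresholds are midpoints between consecutive distinct values
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        error = (sum((y - left_value) ** 2 for y in left)
                 + sum((y - right_value) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    if best is None:  # every x is identical, so no split is possible
        mean = sum(ys) / len(ys)
        return (points[0], mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


# Hypothetical data with an obvious jump between x = 3 and x = 10
xs = [1, 2, 3, 10, 11, 12]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
stump = fit_stump(xs, ys)
print(stump)  # (6.5, 1.0, 5.0)
```

A full decision tree repeats this search inside each side of the split, and the ensembles listed above combine many such trees.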

Regularization

Connects to: All models (Linear, Trees, Ensembles)

Relationship: Universal technique to prevent overfitting. Applied differently across algorithm families.

Forms: L1/L2 for Linear, Pruning for Trees, Early Stopping for Boosting

Bagging

Connects to: Decision Trees (Random Forest), Any base algorithm

Relationship: Trains multiple models on bootstrap samples of the data (drawn with replacement), then averages their predictions (or takes a majority vote for classification).

Key Innovation: Reduces variance through model averaging
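The bagging recipe (resample, fit, average) fits in a few lines. This is a minimal sketch that uses one-split regression trees (stumps) as the base model. The helper names, the default of 25 models, and the toy data are assumptions for illustration only.

```python
import random


def fit_stump(xs, ys):
    """Best single split on one feature by squared error."""
    points = sorted(set(xs))
    best = None
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        error = (sum((y - left_value) ** 2 for y in left)
                 + sum((y - right_value) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    if best is None:  # bootstrap sample had a single distinct x
        mean = sum(ys) / len(ys)
        return (points[0], mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_bagged(xs, ys, n_models=25, seed=0):
    """Fit one stump per bootstrap sample of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw n row indices with replacement
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_bagged(models, x):
    """Average the predictions of all models."""
    return sum(predict_stump(m, x) for m in models) / len(models)


# Hypothetical toy data
xs = [1, 2, 3, 10, 11, 12]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
models = fit_bagged(xs, ys)
prediction = predict_bagged(models, 2.5)
```

Each stump sees a slightly different dataset, so each one makes slightly different mistakes. Averaging smooths those differences out, which is the variance reduction named above.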

Boosting

Connects to: Weak learners (usually Trees), XGBoost evolution

Relationship: Trains models sequentially, with each new model correcting the errors of the ones before it.

Key Innovation: Reduces bias through iterative error correction
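Iterative error correction can be sketched as follows: start from a constant prediction, then repeatedly fit a small tree to the current residuals and add a fraction of its output. This is a simplified form of gradient boosting for squared error. The names, the learning rate of 0.5, and the toy data are assumptions for illustration.

```python
def fit_stump(xs, ys):
    """Best single split on one feature by squared error."""
    points = sorted(set(xs))
    best = None
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        error = (sum((y - left_value) ** 2 for y in left)
                 + sum((y - right_value) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    if best is None:  # no split possible
        mean = sum(ys) / len(ys)
        return (points[0], mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_boosted(xs, ys, n_rounds=20, learning_rate=0.5):
    """Returns (base, learning_rate, stumps) fitted round by round."""
    base = sum(ys) / len(ys)          # round 0: predict the mean
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Each new stump is trained on what the ensemble still gets wrong
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict_boosted(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - predict_boosted(model, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 2.0, 4.0, 4.0, 6.0, 9.0]
baseline = fit_boosted(xs, ys, n_rounds=0)
boosted = fit_boosted(xs, ys, n_rounds=20)
```

Comparing `mse(baseline, xs, ys)` with `mse(boosted, xs, ys)` shows the training error falling as rounds are added. That is the bias reduction, and it is also why boosting needs regularization such as early stopping.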

Random Forest

Connects to: Decision Trees + Bagging + Feature Randomness

Relationship: Combines decision trees with bagging for stability, and considers only a random subset of features at each split.

Innovation: Added random feature selection to reduce tree correlation
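The feature-randomness step can be illustrated on its own: before each split, draw a random subset of features and search for the best split only among those. In this sketch the subset size of roughly the square root of the feature count is a common heuristic used here as an assumption, and the function names and toy data are hypothetical.

```python
import math
import random


def candidate_features(n_features, rng):
    """Pick a random subset of about sqrt(n_features) feature indices."""
    k = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), k))


def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(rows, ys, features):
    """Best (error, feature, threshold) using only the given features."""
    best = None
    for f in features:
        points = sorted({row[f] for row in rows})
        for lo, hi in zip(points, points[1:]):
            threshold = (lo + hi) / 2
            left = [y for row, y in zip(rows, ys) if row[f] <= threshold]
            right = [y for row, y in zip(rows, ys) if row[f] > threshold]
            error = sse(left) + sse(right)
            if best is None or error < best[0]:
                best = (error, f, threshold)
    return best


# Hypothetical data: feature 2 separates the targets perfectly
rows = [[0, 5, 1, 3], [1, 4, 2, 1], [0, 5, 10, 2], [1, 4, 11, 3]]
ys = [0.0, 0.0, 1.0, 1.0]

print(best_split(rows, ys, [0, 1, 2, 3]))  # (0.0, 2, 6.0)
print(best_split(rows, ys, [0, 3])[1])     # 3

rng = random.Random(0)
subset = candidate_features(len(rows[0]), rng)
split = best_split(rows, ys, subset)
```

When the dominant feature is left out of the subset, the tree is forced to split on something else. Across many trees this makes their errors less correlated, so averaging helps more.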

XGBoost

Connects to: Gradient Boosting + Optimization + Regularization

Relationship: An optimized implementation of gradient boosting with regularization built into its objective.

Innovation: Second-order optimization + Systems engineering (parallel, cache-aware tree building) + Built-in regularization
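To see what "built-in regularization" means in practice, the sketch below computes the regularized leaf value and split gain used in XGBoost-style boosting, where G and H are the sums of first and second derivatives of the loss over the rows in a leaf. It is a plain-Python illustration of the formulas, not the library's code. The parameter names `reg_lambda` and `gamma` mirror the library's L2 penalty and minimum-gain settings, and the toy numbers are assumptions.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda):
    """Optimal leaf value under an L2 penalty: w = -G / (H + lambda)."""
    return -grad_sum / (hess_sum + reg_lambda)


def split_gain(g_left, h_left, g_right, h_right, reg_lambda, gamma):
    """Loss reduction from splitting a node, minus the per-leaf cost gamma."""
    def score(g, h):
        return g * g / (h + reg_lambda)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right)
                  - score(g_left + g_right, h_left + h_right)) - gamma


# Hypothetical node: targets [1, 1] go left, [3, 3] go right, and the
# current prediction is 0 for every row. For the loss 0.5 * (pred - y)^2
# the gradient is (pred - y) and the Hessian is 1 per row.
g_left, h_left = -2.0, 2.0
g_right, h_right = -6.0, 2.0

print(leaf_weight(g_left, h_left, reg_lambda=0.0))   # 1.0 (the plain mean)
print(leaf_weight(g_right, h_right, reg_lambda=0.0)) # 3.0
print(leaf_weight(g_left, h_left, reg_lambda=2.0))   # 0.5 (shrunk toward 0)
print(split_gain(g_left, h_left, g_right, h_right, 0.0, 0.0))  # 2.0
print(split_gain(g_left, h_left, g_right, h_right, 0.0, 1.0))  # 1.0
```

A larger `reg_lambda` pulls leaf values toward zero, and a larger `gamma` makes every split pay a fixed cost. A split whose gain falls below zero is not worth making, so the two settings together limit how complex each tree can become.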

Chapter 1 Quiz

Test your understanding of ML technique relationships: