The Journey We've Taken

Throughout this tutorial, we've explored the relationships between different ML techniques. Now let's put it all together with a practical framework for choosing the right algorithm.

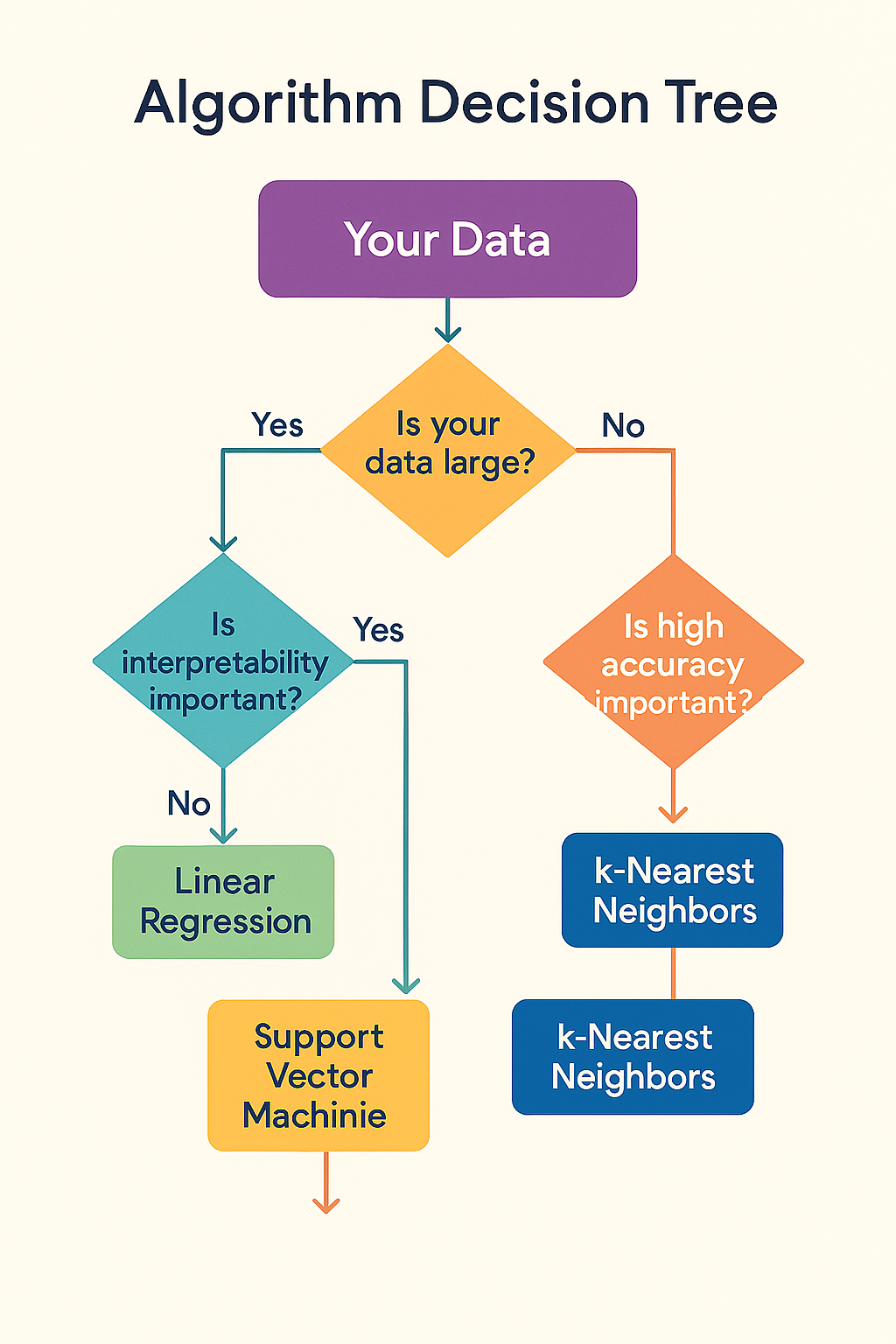


Key Decision Factors

📊 Data Type & Size

- Tabular data: Tree-based methods excel

- Large datasets: Consider scalability

- Small datasets: Avoid complex models

- Missing values: XGBoost handles them natively

🎯 Problem Requirements

- Accuracy priority: XGBoost or Gradient Boosting

- Interpretability: Decision Trees or Linear models

- Speed: Random Forest balances training speed and accuracy

- Stability: Random Forest for robustness

⚡ Resource Constraints

- Limited time: Random Forest (less tuning)

- Limited compute: Linear models

- Memory constraints: Avoid deep ensembles

- Real-time inference: Consider model size

🔧 Maintenance & Deployment

- Production stability: Well-tested algorithms

- Model updates: Consider retraining cost

- Monitoring: Feature importance tracking

- Scalability: Cloud-native solutions
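The decision factors above can be sketched as a small rule-based helper. This is only an illustration: the `Problem` class, the `recommend_algorithm` function, and the 1,000-row cutoff are assumptions made for this example, and the result is a starting point to test, not a final answer.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    """Hypothetical summary of a tabular ML problem."""
    n_rows: int
    needs_interpretability: bool = False
    limited_compute: bool = False
    limited_tuning_time: bool = False
    accuracy_priority: bool = False


def recommend_algorithm(p: Problem) -> str:
    """Map the decision factors to a first candidate worth trying."""
    # Compute limits and interpretability act as hard constraints, so check them first.
    if p.limited_compute:
        return "Linear model"
    if p.needs_interpretability:
        return "Decision Tree or Linear model"
    # Assumed cutoff: with very few rows, avoid complex models.
    if p.n_rows < 1_000:
        return "Linear model"
    if p.limited_tuning_time:
        return "Random Forest"
    if p.accuracy_priority:
        return "XGBoost or Gradient Boosting"
    # Default: a robust option that needs little tuning.
    return "Random Forest"


print(recommend_algorithm(Problem(n_rows=100_000, accuracy_priority=True)))
print(recommend_algorithm(Problem(n_rows=5_000, needs_interpretability=True)))
```

Ordering the checks from hard constraints to preferences mirrors how the factors are usually weighed: a model that cannot run on the available hardware is ruled out before accuracy is even considered.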

Real-World Case Studies

Explore how different algorithms solve real business problems:


💳 Credit Risk Assessment

Problem: Bank needs to predict loan defaults with 95%+ accuracy

Data: 50K customers, mixed numerical/categorical features

Solution: XGBoost

✓ Handles mixed data types

✓ High accuracy requirement

✓ Feature importance supports regulatory explanations

✓ Missing value handling

🏥 Medical Diagnosis

Problem: Diagnose disease from patient symptoms with explainable results

Data: 5K patients, interpretability critical

Solution: Decision Tree + Random Forest Ensemble

✓ Interpretable decision rules

✓ Small dataset size

✓ Feature importance clear

✓ Medical professionals can understand

🚀 Startup Customer Prediction

Problem: Limited data, need quick results, tight budget

Data: 2K customers, fast iteration needed

Solution: Random Forest

✓ Works well with small data

✓ Minimal hyperparameter tuning

✓ Quick to implement and iterate

✓ Robust against overfitting

📡 IoT Sensor Monitoring

Problem: Real-time anomaly detection, resource constraints

Data: Streaming data, edge computing limitations

Solution: Regularized Linear Model

✓ Fast inference required

✓ Limited computational resources

✓ Streaming data compatibility

✓ Low memory footprint

Implementation Guide

Step-by-step guide to implementing your chosen algorithm in production:

From Prototype to Production

1. Data Preparation

- Clean and validate data

- Handle missing values

- Feature engineering

- Train/validation/test split
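As a minimal sketch of the last bullet, the split can be done on shuffled row indices. The function name `train_val_test_split` and the 70/15/15 proportions are assumptions chosen for illustration; the right fractions depend on how much data you have.

```python
import random


def train_val_test_split(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle row indices and cut them into three disjoint groups."""
    indices = list(range(n_samples))
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_frac)
    n_val = round(n_samples * val_frac)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(1000)
print(len(train), len(val), len(test))  # 700 150 150
```

The test indices should be set aside before any tuning so that the final evaluation uses rows the model selection process never saw.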

2. Algorithm Selection

- Apply the key decision factors above

- Consider constraints

- Baseline with simple model

- Test multiple candidates

3. Hyperparameter Tuning

- Cross-validation setup

- Grid/random search

- Bayesian optimization

- Early stopping
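To make the difference between grid and random search concrete, here is a small sketch. The `validation_score` function is a hypothetical stand-in for a real cross-validated score, and the parameter names and ranges are assumptions for illustration only.

```python
import itertools
import random


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for a cross-validated score; peaks at (0.1, 6)."""
    return 1.0 - (learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 6) ** 2


def grid_search(grid):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    candidates = [dict(zip(names, values))
                  for values in itertools.product(*grid.values())]
    return max(candidates, key=lambda params: validation_score(**params))


def random_search(n_trials, seed=0):
    """Sample n_trials settings at random and return the best one."""
    rng = random.Random(seed)
    candidates = [
        {"learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
         "max_depth": rng.randint(2, 10)}
        for _ in range(n_trials)
    ]
    return max(candidates, key=lambda params: validation_score(**params))


grid = {"learning_rate": [0.01, 0.1, 0.3], "max_depth": [3, 6, 9]}
print(grid_search(grid))
print(random_search(n_trials=20))
```

Grid search cost grows multiplicatively with each added parameter (here 3 × 3 = 9 evaluations), while random search keeps a fixed budget regardless of how many parameters are sampled.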

4. Model Validation

- Multiple metrics evaluation

- Cross-validation

- Out-of-time testing

- Feature importance analysis
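Out-of-time testing means training on older records and evaluating on newer ones, which is closer to how a deployed model is actually used. A minimal sketch, assuming each record carries a `date` field (the records and field names here are made up for illustration):

```python
from datetime import date


def out_of_time_split(rows, cutoff):
    """Train on rows dated before the cutoff; test on rows on or after it."""
    train = [row for row in rows if row["date"] < cutoff]
    test = [row for row in rows if row["date"] >= cutoff]
    return train, test


# Hypothetical loan records; only the date matters for the split.
rows = [
    {"date": date(2023, 1, 10), "defaulted": 0},
    {"date": date(2023, 6, 5), "defaulted": 1},
    {"date": date(2023, 11, 20), "defaulted": 0},
    {"date": date(2024, 2, 14), "defaulted": 0},
    {"date": date(2024, 5, 1), "defaulted": 1},
]
train, test = out_of_time_split(rows, cutoff=date(2024, 1, 1))
print(len(train), len(test))  # 3 2
```

Unlike a random split, this never lets the model see information from the period it is evaluated on.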

Chapter 8: Final Mastery Quiz

Test your complete understanding of ML model relationships and selection:

Question 1: Algorithm Selection for Production System

You have a tabular dataset with 100K rows, mixed data types, and need maximum accuracy for a production system. Which algorithm should you choose?

- Linear Regression: too simple for complex patterns

- Decision Tree: likely to overfit without ensembling

- Random Forest: good, but XGBoost typically achieves higher accuracy

- XGBoost: optimal for large tabular data with mixed types and accuracy requirements

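Correct! XGBoost is a strong choice for this scenario: it excels on tabular data, handles missing values natively, includes built-in regularization, and is often among the most accurate methods on structured data problems. Note that categorical features usually need to be encoded (or XGBoost's categorical support enabled) before training.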

Question 2: Medical Diagnosis System Requirements

For a medical diagnosis system where doctors need to understand the decision process, which approach is most appropriate?

- XGBoost with default settings

- Decision Tree or shallow Random Forest, with feature importance analysis

- Deep Gradient Boosting with 1000+ trees

- Highly regularized linear model only

Exactly! Medical applications require interpretability. Decision Trees provide clear decision paths, and shallow Random Forests offer both interpretability and improved robustness while maintaining explainable feature importance.

Question 3: Random Forest vs Gradient Boosting Trade-off

What is the most important factor when choosing between Random Forest and Gradient Boosting?

- Random Forest is always better for large datasets

- Trade-off between accuracy needs and overfitting risk/tuning complexity

- Gradient Boosting is always more accurate

- Random Forest only works with numerical data

Perfect! The key trade-off is accuracy vs complexity: Gradient Boosting can achieve higher accuracy but requires more careful tuning and has higher overfitting risk. Random Forest is more robust and stable with minimal tuning.

Question 4: Evolution of Ensemble Methods

Which sequence best represents the progression from simple models to advanced ensemble methods?

- Linear Models → Decision Trees → Random Forest → Gradient Boosting → XGBoost

- Decision Trees → Linear Models → XGBoost → Random Forest

- XGBoost → Gradient Boosting → Random Forest → Decision Trees

- Random Forest → Decision Trees → Linear Models → XGBoost

Excellent! This sequence shows the natural progression: starting with simple linear models, moving to single decision trees, then parallel ensembles (Random Forest), sequential ensembles (Gradient Boosting), and finally optimized implementations (XGBoost).

Question 5: Bias-Variance Trade-off Understanding

Which statement best describes the bias-variance trade-off in the algorithms we studied?

- All algorithms have the same bias-variance characteristics

- Linear models have high bias/low variance, decision trees have low bias/high variance

- Ensemble methods always increase both bias and variance

- XGBoost eliminates the bias-variance trade-off completely

Correct! Linear models are consistent but potentially too simple (high bias, low variance), while decision trees are flexible but unstable (low bias, high variance). Ensemble methods help balance this trade-off.

Question 6: Regularization Purpose

What is the primary purpose of L1 and L2 regularization in machine learning?

- To make models train faster

- To prevent overfitting by penalizing model complexity

- To increase model accuracy on training data

- To make models more interpretable

Right! Regularization techniques like L1 (Lasso) and L2 (Ridge) add penalties to the loss function to prevent the model from becoming too complex and overfitting to the training data.
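The penalties can be written out directly. This sketch assumes a mean squared error loss and uses hypothetical names (`penalized_loss`, `l1`, `l2`) for the penalty strengths; libraries differ in how they scale these terms.

```python
def penalized_loss(errors, weights, l1=0.0, l2=0.0):
    """Mean squared error plus L1 and L2 penalties on the weights."""
    mse = sum(e * e for e in errors) / len(errors)
    l1_penalty = l1 * sum(abs(w) for w in weights)  # Lasso term
    l2_penalty = l2 * sum(w * w for w in weights)   # Ridge term
    return mse + l1_penalty + l2_penalty


errors = [1.0, -1.0]
weights = [3.0, -4.0]
print(penalized_loss(errors, weights))          # 1.0
print(penalized_loss(errors, weights, l1=0.5))  # 4.5
print(penalized_loss(errors, weights, l2=0.5))  # 13.5
```

With the same prediction errors, larger weights now cost more, so the optimizer is pushed toward smaller weights. The L2 term grows quadratically and penalizes large weights most heavily, while the L1 term grows linearly and tends to drive some weights exactly to zero.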

Question 7: Ensemble Method Advantages

Why do ensemble methods typically outperform single models?

- They always use more data

- They are faster to train

- They combine multiple perspectives to reduce individual model weaknesses

- They require less hyperparameter tuning

Exactly! Ensemble methods work by combining multiple models that may make different errors, thereby reducing the impact of individual model weaknesses and improving overall performance.
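A quick calculation shows why combining models helps. The sketch below assumes that each model is correct with the same probability and that their errors are independent, which real ensembles only approximate; `majority_vote_accuracy` is a name made up for this example.

```python
from math import comb


def majority_vote_accuracy(n_models, p_correct):
    """Probability that more than half of n independent models are correct."""
    needed = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p_correct ** k * (1 - p_correct) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )


for n in (1, 3, 11):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

Under these assumptions, three models that are each right 70% of the time give a majority vote that is right about 78.4% of the time, and the figure keeps rising as more models are added. When model errors are correlated the gain is smaller, which is why ensemble methods work to make their members diverse.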

Question 8: Random Forest Key Features

What are the two key techniques that make Random Forest effective?

- Gradient descent and regularization

- Bootstrap sampling and feature randomness

- Sequential training and residual learning

- L1 and L2 regularization

Perfect! Random Forest uses bootstrap sampling to create diverse datasets for each tree and feature randomness to ensure trees make different splits, reducing correlation between trees.
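Both techniques are simple to sketch with the standard library. The helper names below are hypothetical, and the square-root rule for the number of features is a common default for classification rather than a requirement.

```python
import math
import random


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def random_feature_subset(n_features, rng):
    """Pick about sqrt(n_features) distinct feature indices."""
    k = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(42)
for tree_id in range(3):
    rows = bootstrap_indices(n_rows=10, rng=rng)
    # Random Forest draws a fresh feature subset at every split;
    # a single draw per tree is shown here to keep the sketch short.
    features = random_feature_subset(n_features=16, rng=rng)
    print(tree_id, rows, features)
```

Because sampling is done with replacement, each bootstrap sample repeats some rows and leaves others out, so every tree sees a slightly different dataset.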

Question 9: Gradient Boosting Learning Process

How does gradient boosting differ from random forest in its learning approach?

- It uses deeper trees

- It trains models sequentially, each learning from previous errors

- It uses more data

- It requires more computational power

Correct! Gradient boosting trains models sequentially, with each new model specifically trained to correct the residual errors of the previous models, while Random Forest trains trees independently in parallel.
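The sequential idea can be sketched in pure Python with one-split trees (stumps) on a single feature. This is a teaching sketch under simplifying assumptions, not how production libraries implement it: it uses squared error, for which the residuals are exactly the negative gradient, and the data and function names are made up.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split on x that minimizes squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Fit stumps one after another, each on the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # Residuals are what the ensemble built so far still gets wrong.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


def mse(predict, xs, ys):
    return sum((y - predict(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0, 5.2, 5.0]
one_round = gradient_boost(xs, ys, n_rounds=1)
many_rounds = gradient_boost(xs, ys, n_rounds=20)
print(mse(one_round, xs, ys), mse(many_rounds, xs, ys))
```

Training error keeps shrinking as rounds are added, which is also why boosting can overfit: the learning rate and the number of rounds need to be tuned against validation data, not training data.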

Question 10: When to Use Linear Models

In which scenario would you choose a linear model over ensemble methods?

- When you need maximum accuracy

- When you have very large datasets

- When you need fast inference and interpretability with limited computational resources

- When your data has many missing values

Excellent! Linear models are ideal when you need fast inference and interpretability with limited computational resources. They train quickly, make predictions instantly, and provide clear coefficient interpretations.

Question 11: XGBoost Competitive Advantages

What makes XGBoost superior to traditional gradient boosting?

- It uses different algorithms

- System optimizations, built-in regularization, and automatic missing value handling

- It only works with large datasets

- It doesn't require hyperparameter tuning

Right! XGBoost improves upon gradient boosting with parallel processing, cache optimization, built-in L1/L2 regularization, automatic missing value handling, and advanced tree pruning techniques.

Question 12: Feature Importance Analysis

Why is feature importance analysis crucial in ensemble methods?

- It makes models train faster

- It reduces overfitting automatically

- It helps understand model behavior and identify the most influential variables

- It eliminates the need for data preprocessing

Perfect! Feature importance helps understand which variables drive model predictions, enables feature selection, supports model interpretation, and can reveal insights about the underlying problem domain.
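One model-agnostic way to measure this is permutation importance, shown here as a substitute for the built-in importances of tree ensembles: shuffle one feature column and see how much the error grows. The sketch uses a hypothetical `predict` function in place of a trained model, with made-up data.

```python
import random


def permutation_importance(predict, rows, targets, feature_index, seed=0):
    """Increase in mean squared error after shuffling one feature column."""
    def mse(data):
        return sum((predict(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

    baseline = mse(rows)
    column = [r[feature_index] for r in rows]
    random.Random(seed).shuffle(column)
    # Rebuild each row with the shuffled value in place of the original.
    shuffled = [r[:feature_index] + [v] + r[feature_index + 1:]
                for r, v in zip(rows, column)]
    return mse(shuffled) - baseline


# Hypothetical model that depends only on feature 0 and ignores feature 1.
def predict(row):
    return 2.0 * row[0]


rows = [[float(i), float(i % 3)] for i in range(20)]
targets = [predict(r) for r in rows]
print(permutation_importance(predict, rows, targets, feature_index=0))
print(permutation_importance(predict, rows, targets, feature_index=1))  # 0.0
```

Shuffling the feature the model relies on raises the error sharply, while shuffling the ignored feature changes nothing, so its importance is zero.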

Question 13: Cross-Validation Purpose

What is the primary benefit of using cross-validation in model selection?

- It makes models more accurate

- It provides more reliable estimates of model performance on unseen data

- It reduces training time

- It eliminates the need for a test set

Exactly! Cross-validation uses multiple train/validation splits to get a more robust estimate of how the model will perform on unseen data, reducing the impact of any single validation set's characteristics.
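The splits themselves are easy to generate. A minimal sketch of k-fold index generation, with the function name `k_fold_indices` chosen for this example and no shuffling for simplicity:

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


for train, val in k_fold_indices(n_samples=10, k=3):
    print("validate on", val, "train on", train)
```

Every row is used for validation exactly once, and the k scores are averaged to get the final estimate. In practice the rows are usually shuffled first, unless the data is time-ordered.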

Question 14: Hyperparameter Tuning Strategy

What is the recommended approach for hyperparameter tuning?

- Always use random search

- Tune all parameters simultaneously from the start

- Start with default parameters, then tune systematically (grid search for a few parameters, random search for many)

- Only tune learning rate

Correct! Best practice is to establish a baseline with default parameters, then systematically tune parameters starting with the most impactful ones, using grid search for few parameters and random search for many parameters.

Question 15: Production Deployment Considerations

What is the most critical factor when deploying ML models to production?

- Using the most complex model possible

- Achieving 100% accuracy on training data

- Monitoring model performance and data drift over time

- Never updating the model once deployed

Excellent! Production models need continuous monitoring for performance degradation, data drift, and changing patterns. Without monitoring and updating, even the best models will deteriorate over time as real-world conditions change.
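One simple way to put a number on data drift is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in recent traffic. It is one option among several, introduced here only as an illustration; the bin count and the samples are assumptions.

```python
import math


def population_stability_index(expected, actual, n_bins=10):
    """PSI between a reference sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = ((hi - lo) / n_bins) or 1.0  # guard against a constant feature

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range fall into the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 100 for i in range(100)]    # training-time values
recent = [0.5 + i / 200 for i in range(100)]  # shifted production values
print(population_stability_index(reference, reference))  # 0.0
print(population_stability_index(reference, recent))
```

A PSI of zero means the two distributions match bin for bin, and larger values indicate a bigger shift. Tracking it per feature over time gives an early signal that the model may need retraining.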